Image credit: Unsplash

Abstract

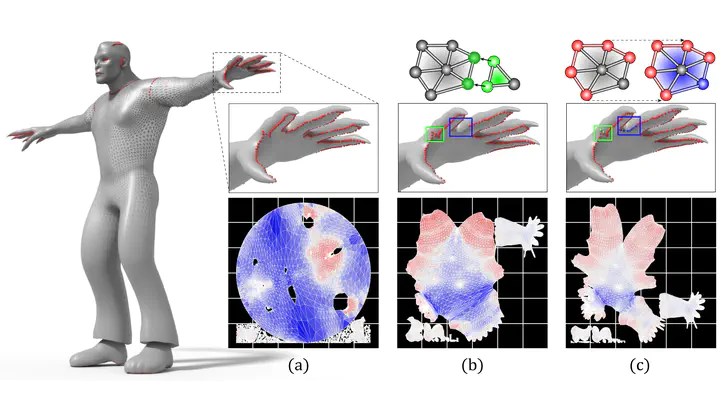

Recently, there has been significant effort to automate UV mapping, the process of mapping 3D surfaces to UV space while minimizing distortion and seam length. Although the state-of-the-art methods, Autocuts and OptCuts, address this task via energy-minimization approaches, they fail to produce semantic seam styles, an essential factor for professional artists. The recent emergence of Graph Neural Networks (GNNs), together with the fact that a mesh can be represented as a particular form of graph, has opened the way to novel graph-learning-based solutions in the computer graphics domain. In this work, we use supervised GNNs for the first time to propose a fully automated UV mapping framework that enables users to replicate their desired seam styles while reducing distortion and seam length. To this end, we provide augmentation and decimation tools that enable artists to create their own datasets and train the network to produce their desired seam style. We also provide a complementary post-processing approach that reduces distortion by using graph algorithms to refine low-confidence seam predictions, and that reduces seam length (or the number of shells in our supervised case) using a skeletonization method.
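As a minimal illustration of the observation that a mesh can be treated as a graph, the sketch below extracts the undirected edge set of a small triangle mesh and measures the total length of a candidate seam set. It is not the framework's implementation: the helper names `mesh_edges` and `seam_length`, the tetrahedron, and the hand-picked seam edges are all assumptions made for the example, and the seams stand in for what a trained model would predict as per-edge labels.

```python
from itertools import combinations
from math import dist


def mesh_edges(faces):
    """Return the sorted unique undirected edges of a triangle mesh.

    Each face is a tuple of vertex indices; each edge is stored as
    (smaller_index, larger_index) so shared edges are counted once.
    """
    edges = set()
    for face in faces:
        for a, b in combinations(face, 2):
            edges.add((min(a, b), max(a, b)))
    return sorted(edges)


def seam_length(vertices, seam_edges):
    """Sum the Euclidean lengths of the edges marked as seams."""
    return sum(dist(vertices[a], vertices[b]) for a, b in seam_edges)


# Hypothetical example mesh: a tetrahedron with one corner at the origin.
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0),
]
faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]

edges = mesh_edges(faces)

# Hand-picked seam labels (an assumption standing in for model predictions):
# cutting the three edges that meet at vertex 0.
seams = [(0, 1), (0, 2), (0, 3)]
total = seam_length(vertices, seams)
```

In this view, seam prediction becomes a binary labeling of the graph's edges, and seam length is one quantity that a post-processing step could aim to reduce.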
