Biography

I’m a Manager and Principal Research Scientist in the AI Lab at Autodesk Research. My research addresses current challenges in computer graphics by combining machine learning with numerical methods for the media and entertainment (M&E) space.

Prior to joining Autodesk, I received my B.Eng. and M.Eng., both in Computer Engineering, from the École de technologie supérieure (ÉTS), supervised by Eric Paquette, and my Ph.D. in Computer Science from the Université de Montréal, advised by Pierre Poulin. During my Ph.D., I explored ways to improve numerical simulations of particle-based fluids by leveraging hybrid and data-driven methods using iterative solvers.

- Physics-Based Animation

- Fluid Simulations

- Machine Learning for 3D

- Ph.D. in Computer Science, 2021, Université de Montréal

- M.Eng. in Computer Engineering, 2015, École de technologie supérieure (ÉTS)

- B.Eng. in Computer Engineering, 2012, École de technologie supérieure (ÉTS)

Publications

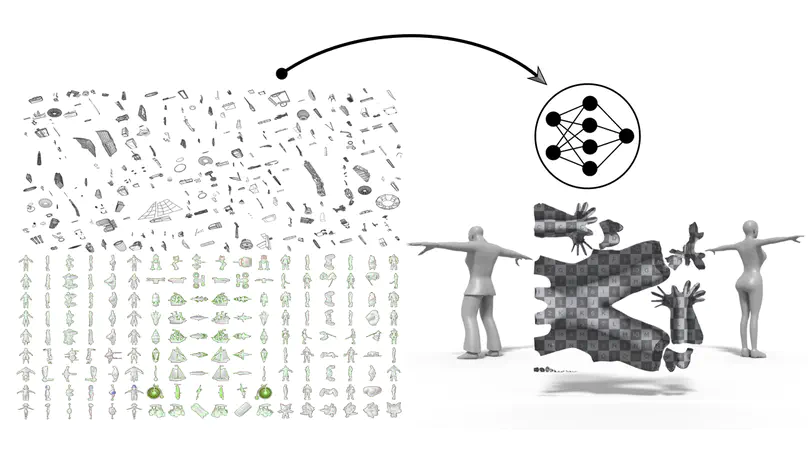

Partitioning a polygonal mesh can be challenging. Many computer graphics applications require decomposing such structures for further processing. In the last decade, several methods were proposed to tackle this problem, at the cost of intensive computation times. Recently, machine learning has proven to be effective for the segmentation task on 3D structures. Nevertheless, the state-of-the-art deep learning methods introduced to handle this task are hardly generalizable and require dividing the learned model into several specific classes of objects to avoid overfitting. We present a data-driven approach leveraging deep learning to encode a mapping function prior to mesh segmentation for multiple applications. Our network reproduces a mapping using our knowledge of the Shape Diameter Function (SDF) method to generate such a correspondence using similarities among vertex neighborhoods. Using our predicted mapping, we can inject the resulting structure into a graph cut algorithm to generate an efficient and robust mesh segmentation while considerably reducing the required computation times.

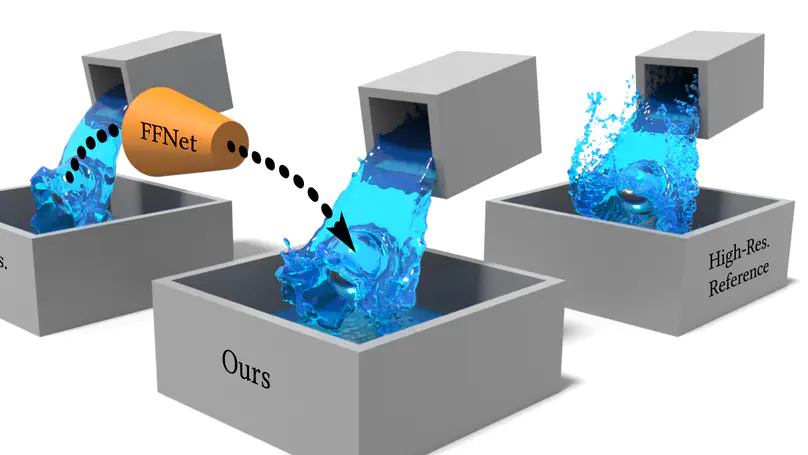

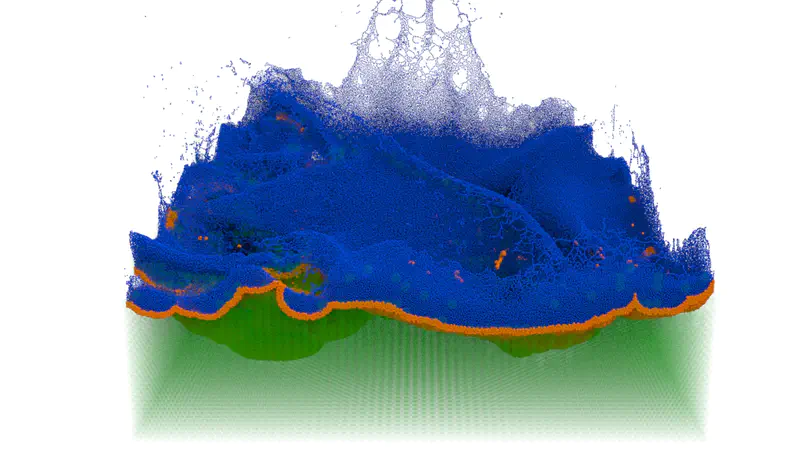

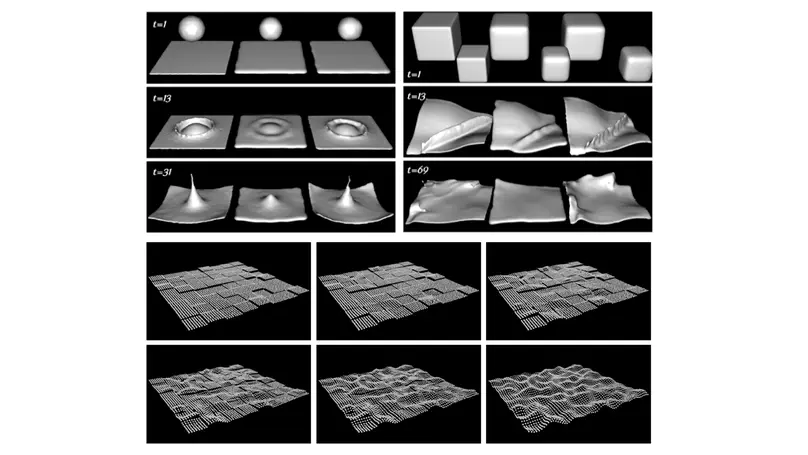

The approximation of natural phenomena such as liquid simulations in computer graphics requires complex methods that are computationally expensive. Despite recent advances in this field, the gap in realism between a simulated liquid and reality remains considerable. Closing this gap requires numerical models whose complexity continues to grow. The ultimate goal is to provide users with the capacity and tools to manipulate these liquid simulation models to obtain acceptable realism. In the last decade, several approaches have been revisited to simplify these models and make them more flexible. In this dissertation by articles, we present three projects whose contributions support the improvement and flexibility of generating liquid simulations for computer graphics. First, we introduce a hybrid approach allowing us to separately process the volume of non-apparent liquid (i.e., in-depth) and a band of surface particles using the Smoothed Particle Hydrodynamics (SPH) method. We revisit the particle band approach, but this time newly applied to the SPH method, which offers a higher level of realism. Then, as a second project, we propose an approach to improve the level of detail of splashing liquids. By upsampling an existing liquid simulation, our approach is capable of generating realistic splash details through ballistic dynamics. In addition, we propose a wave simulation method to reproduce the interactions between the generated splashes and the quasi-static portions of the existing liquid simulation. Finally, the third project introduces an approach to enhance the apparent resolution of liquids through machine learning. We propose a learning architecture inspired by optical flows by which we generate a correspondence between the displacement of the particles of liquid simulations at different resolutions (i.e., low and high resolutions). Our training model allows high-resolution features to be encoded using pre-computed deformations between two liquids at different resolutions and convolution operations based on the neighborhood of the particles.

We present a novel up-resing technique for generating high-resolution liquids based on scene flow estimation using deep neural networks. Our approach infers and synthesizes small- and large-scale details solely from a low-resolution particle-based liquid simulation. The proposed network leverages neighborhood contributions to encode inherent liquid properties through convolutions. We also propose a particle-based approach to interpolate between liquids generated from varying simulation discretizations using a state-of-the-art bidirectional optical flow solver for fluids together with a novel key-event topological alignment constraint. In conjunction with the neighborhood contributions, our loss formulation allows the inference model, throughout epochs, to reward important differences with regard to significant gaps in simulation discretizations. Even when applied to an untested simulation setup, our approach is able to generate plausible high-resolution details. Using this interpolation approach and the predicted displacements, our approach combines the input liquid properties with the predicted motion to infer semi-Lagrangian advection. We furthermore showcase how the proposed interpolation approach can facilitate generating large simulation datasets with a subset of initial condition parameters.

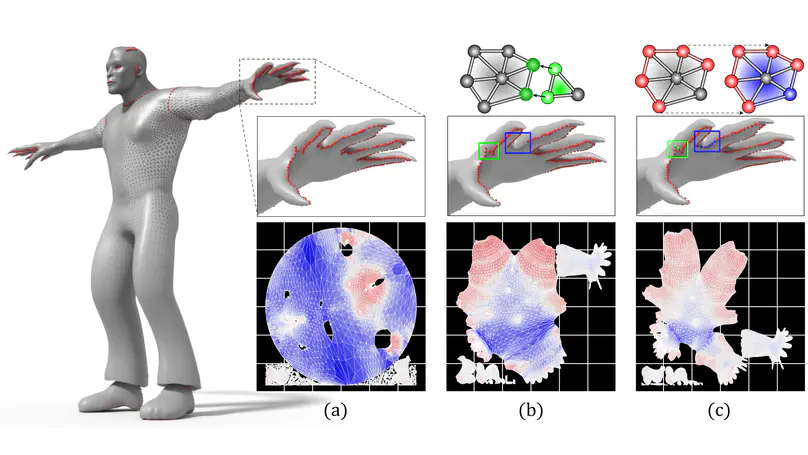

Recently, there has been a significant effort to automate UV mapping, the process of mapping 3D surfaces to the UV space while minimizing distortion and seam length. Although the state-of-the-art methods Autocuts and OptCuts address this task via energy-minimization approaches, they fail to produce semantic seam styles, an essential factor for professional artists. The recent emergence of Graph Neural Networks (GNNs), and the fact that a mesh can be represented as a particular form of a graph, has opened a new bridge to novel graph learning-based solutions in the computer graphics domain. In this work, we use the power of supervised GNNs for the first time to propose a fully automated UV mapping framework that enables users to replicate their desired seam styles while reducing distortion and seam length. To this end, we provide augmentation and decimation tools to enable artists to create their dataset and train the network to produce their desired seam style. We provide a complementary post-processing approach for reducing the distortion based on graph algorithms to refine low-confidence seam predictions and reduce seam length (or the number of shells in our supervised case) using a skeletonization method.

One embodiment of the present application sets forth a computer-implemented method for generating a set of seam predictions for a three-dimensional (3D) model. The method includes generating, based on the 3D model, one or more representations of the 3D model as inputs for one or more trained machine learning models; generating a set of seam predictions associated with the 3D model by applying the one or more trained machine learning models to the one or more representations of the 3D model, wherein each seam prediction included in the set of seam predictions identifies a different seam along which the 3D model can be cut; and placing one or more seams on the 3D model based on the set of seam predictions. At least one advantage of the disclosed techniques is that, unlike prior approaches, the computer system automatically generates seams for a 3D model that account for semantic boundaries and seam location while minimizing distortion and reducing the number of pieces required to preserve the semantic boundaries. In addition, the use of trained machine learning models allows the computer system to generate seams based on learned best practices, i.e., based on unobservable criteria extracted during the machine learning model training process.

The discretization of fluids can significantly affect computation times of traditional SPH methods. Even if state-of-the-art SPH methods such as divergence-free SPH (DFSPH) produce excellent small-scale details for complex scenarios, simulating a large volume of liquid with these particle-based approaches is still highly expensive considering that only a fraction of the particles will contribute to the visible outcome. This paper introduces a hybrid Eulerian-DFSPH method to reduce the dependency on the number of particles by constraining kernel-based calculations to a narrow band of particles at the liquid surface. A coarse Eulerian grid handles volume conservation and leads to a fast convergence of the pressure forces through a hybrid method. We ensure the stability of the liquid by seeding larger particles (which we call fictitious) in the grid below the band and by advecting them along the SPH particles. These fictitious particles require a two-scale DFSPH model with distinct masses inside and below the band. The significantly heavier fictitious particles are used to correct the density and reduce its fluctuation inside the liquid. Our approach effectively preserves small-scale surface details over large bodies of liquid at a fraction of the computation cost required by an equivalent reference high-resolution SPH simulation.

In computer graphics, modeling natural phenomena, such as fluids, is a complex task and requires significant computation time. Moreover, adding small details in fluid simulations, such as surface turbulence, is an active research topic in the field of visual effects. Our interest in this research is to preserve those small details, especially at the free surface of the fluid. The discretization of these phenomena requires millions of particles in order to achieve a result similar to the exact solution. However, simulating such a large number of particles is a time-consuming process. Besides, the behaviour of a fluid simulation is highly dependent on the number of particles. In addition, it is sometimes difficult to obtain the desired result with such simulations. Some important features may become visible by simply increasing the number of particles of the simulation. The kinetic energy and turbulence forces are also important factors that can influence the behaviour of the fluid. The approach proposed in this master’s thesis aims to recreate the surface appearance of a high-resolution fluid based on low- and high-resolution fluid examples using a dictionary learning method. The learning process relies on a coupled dictionary which is generated from concatenated dictionaries. All dictionaries are used to establish a correspondence between the surface geometric features and physical fluid properties from learned examples. These examples are represented by patches of the fluid surface. Our method considers these geometric patches as height fields. The energy spectrum and vorticity forces are also evaluated per patch in order to preserve the observed physical properties of the fluid. Reconstructing new patches is formulated as an optimization problem with geometric and physical constraints. The minimization result is a linear combination of the dictionary atoms. This weighted sparse vector is used to generate a high-resolution representation of each patch of the input low-resolution fluid. In some cases, a spatial term must be added to force the patch borders to be aligned with their neighbors. This approach makes it possible to model the overall appearance of the high-resolution fluid surface with only a few thousand particles. Moreover, this process is done in a fraction of the computation time required to generate the high-resolution fluid. Although the objectives are not the same as with vortex particle methods, our approach can be used together with them to generate more realistic and low-cost high-resolution fluids.
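The coupled-dictionary idea in the abstract above can be illustrated with a minimal sketch. It is not the thesis implementation. It assumes two dictionaries that share one set of sparse weights, and it swaps in plain orthogonal matching pursuit for the thesis's constrained optimization, leaving out the energy spectrum, vorticity, and spatial terms. The names `omp`, `upres_patch`, `D_low`, and `D_high` are hypothetical, and the dictionaries here are random rather than learned.

```python
import numpy as np


def omp(D, y, n_nonzero):
    """Orthogonal matching pursuit: find a sparse w such that D @ w ~= y.

    Assumes the columns (atoms) of D have unit norm.
    """
    residual = y.astype(float).copy()
    support = []
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        # Select the atom most correlated with the current residual.
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:
            break
        support.append(k)
        # Refit all selected atoms jointly by least squares.
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    w = np.zeros(D.shape[1])
    if support:
        w[support] = coef
    return w


def upres_patch(D_low, D_high, patch_low, n_nonzero=3):
    """Code a low-resolution patch, then reuse the weights on the high-resolution atoms."""
    w = omp(D_low, patch_low, n_nonzero)
    return D_high @ w, w


# Toy data: 16 low-resolution atoms (4x4 height-field patches, flattened)
# paired with 16 high-resolution atoms (8x8 patches, flattened).
rng = np.random.default_rng(0)
D_low = np.linalg.qr(rng.standard_normal((16, 16)))[0]  # orthonormal atoms
D_high = rng.standard_normal((64, 16))

w_true = np.zeros(16)
w_true[[2, 7]] = [1.5, -0.5]
patch_low = D_low @ w_true

patch_high, w = upres_patch(D_low, D_high, patch_low, n_nonzero=2)
print(np.flatnonzero(np.abs(w) > 1e-8), patch_high.shape)
```

In this toy setup the low-resolution atoms are orthonormal, so the greedy search recovers the two active atoms. Learned dictionaries are usually overcomplete, in which case recovery is only approximate and a regularized solver may be a better fit.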